Classification with NNs - PyTorch Lightning

This final notebook shows how to adapt the feed-forward neural network classification examples to PyTorch Lightning, a Python library built on top of PyTorch that eases research and the scaling of models to complex hardware (e.g., multi-GPU setups).
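For comparison, here is a minimal sketch of the kind of hand-written PyTorch training loop that Lightning takes over. The toy data, network layout, learning rate, and number of epochs are assumptions chosen for illustration and are not taken from the earlier notebooks. In Lightning, the forward pass and loss computation move into `training_step`, the optimizer moves into `configure_optimizers`, and the `Trainer` runs the `zero_grad` / `backward` / `step` sequence.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy binary-classification data (illustrative only, not the notebook's dataset)
X = torch.randn(64, 2)
y = (X[:, :1] > 0).float()  # shape (64, 1), labels in {0., 1.}

# Small feed-forward network ending in a sigmoid, so outputs are probabilities
network = nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1), nn.Sigmoid())
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(network.parameters(), lr=0.1)

losses = []
for epoch in range(100):
    optimizer.zero_grad()            # reset gradients from the previous step
    loss = criterion(network(X), y)  # forward pass and loss
    loss.backward()                  # backpropagation
    optimizer.step()                 # parameter update
    losses.append(loss.item())

print(f"first loss: {losses[0]:.3f}, last loss: {losses[-1]:.3f}")
```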

%matplotlib inline

import random

import numpy as np

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import pytorch_lightning as pl

import scooby

from mpl_toolkits.mplot3d import Axes3D

from sklearn.datasets import make_moons

from sklearn.metrics import accuracy_score

from torch.utils.data import TensorDataset, DataLoader

from torchsummary import summary

from dataset import make_train_test

from model import SingleHiddenLayerNetwork

from utils import set_seed

set_seed(42)

# define the LightningModule

class LitClassifier(pl.LightningModule):
    def __init__(self, network, criterion):
        super().__init__()
        self.network = network
        self.criterion = criterion

    def training_step(self, batch, batch_idx):
        # training_step defines the train loop.
        x, y = batch
        yprob = self.network(x)
        ls = self.criterion(yprob, y)
        # Logging to TensorBoard
        self.log("train_loss", ls)
        return ls

    def configure_optimizers(self):
        optimizer = torch.optim.SGD(self.parameters(), lr=1)
        return optimizer

Create input dataset

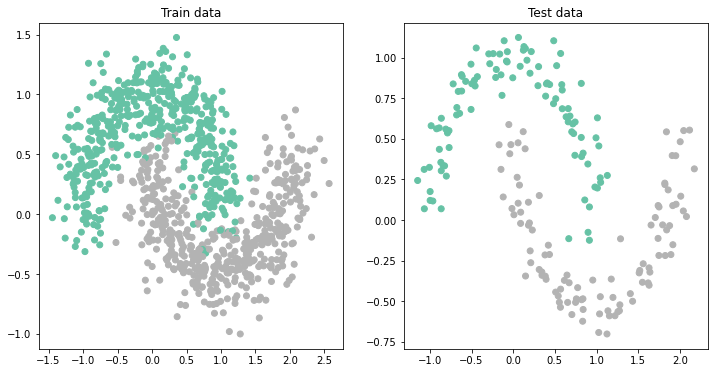

train_size = 1000 # Size of training data

test_size = 200 # Size of test data

X_train, y_train, X_test, y_test, train_loader, test_loader = make_train_test(
    train_size, test_size, noise=0.2, batch_size=64)

fig, ax = plt.subplots(1, 2, figsize=(12, 6))

ax[0].scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap='Set2')

ax[0].set_title('Train data')

ax[1].scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap='Set2');

ax[1].set_title('Test data');
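The `make_train_test` helper comes from a local `dataset` module that is not shown in this notebook. The sketch below is a hypothetical stand-in, assuming it wraps `make_moons` (which is imported at the top of the notebook) and returns float tensors together with two `DataLoader` objects. The function name `make_train_test_sketch`, the fixed `random_state`, and the `(N, 1)` target shape inside the loaders are assumptions, not the original code.

```python
import numpy as np
import torch
from sklearn.datasets import make_moons
from torch.utils.data import TensorDataset, DataLoader


def make_train_test_sketch(train_size, test_size, noise=0.2, batch_size=64):
    """Hypothetical stand-in for dataset.make_train_test (not the original code)."""
    # make_moons shuffles by default, so slicing yields a random train/test split
    X, y = make_moons(n_samples=train_size + test_size, noise=noise, random_state=0)
    X = torch.from_numpy(X.astype(np.float32))
    y = torch.from_numpy(y.astype(np.float32))

    X_train, y_train = X[:train_size], y[:train_size]
    X_test, y_test = X[train_size:], y[train_size:]

    # Targets get shape (N, 1) in the loaders to match a single-output network
    train_loader = DataLoader(TensorDataset(X_train, y_train.unsqueeze(1)),
                              batch_size=batch_size, shuffle=True)
    test_loader = DataLoader(TensorDataset(X_test, y_test.unsqueeze(1)),
                             batch_size=batch_size, shuffle=False)
    return X_train, y_train, X_test, y_test, train_loader, test_loader


X_tr, y_tr, X_te, y_te, tr_loader, te_loader = make_train_test_sketch(1000, 200)
xb, yb = next(iter(tr_loader))
print(X_tr.shape, X_te.shape, xb.shape, yb.shape)
```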

Train

network = SingleHiddenLayerNetwork(2, 8, 1)

bce_loss = nn.BCELoss()

classifier = LitClassifier(network, bce_loss)

# train the model

trainer = pl.Trainer(max_epochs=1000, log_every_n_steps=10)

trainer.fit(model=classifier, train_dataloaders=train_loader)

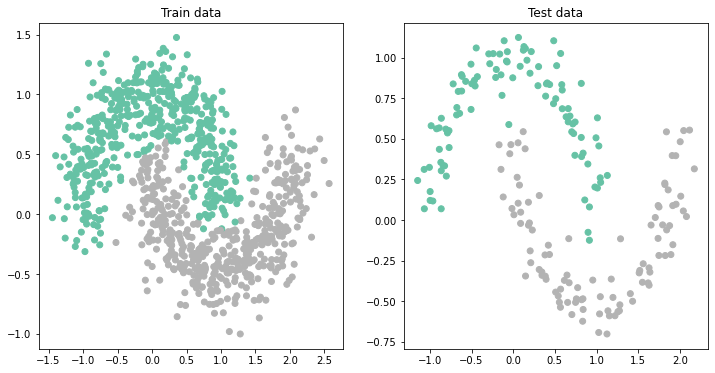
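The `SingleHiddenLayerNetwork(2, 8, 1)` trained above is imported from a local `model` module that is not shown here. Because `nn.BCELoss` expects probabilities in [0, 1] and targets of the same shape as the predictions, the network presumably ends in a sigmoid. The class below is a hypothetical stand-in under that assumption (the ReLU activation and the layer names are guesses), followed by a check that `nn.BCELoss` matches the binary cross-entropy written out by hand.

```python
import torch
import torch.nn as nn


class SingleHiddenLayerSketch(nn.Module):
    """Hypothetical stand-in for model.SingleHiddenLayerNetwork (not the original code)."""

    def __init__(self, n_inputs, n_hidden, n_outputs):
        super().__init__()
        self.hidden = nn.Linear(n_inputs, n_hidden)
        self.activation = nn.ReLU()  # assumed; the original activation is not shown
        self.output = nn.Linear(n_hidden, n_outputs)

    def forward(self, x):
        z = self.activation(self.hidden(x))
        return torch.sigmoid(self.output(z))  # probabilities in (0, 1)


torch.manual_seed(0)
net = SingleHiddenLayerSketch(2, 8, 1)
x = torch.randn(5, 2)
y = torch.tensor([[0.0], [1.0], [1.0], [0.0], [1.0]])  # same shape as the output

probs = net(x)
loss = nn.BCELoss()(probs, y)

# The same quantity written out by hand: mean binary cross-entropy
manual = -(y * torch.log(probs) + (1 - y) * torch.log(1 - probs)).mean()
print(loss.item(), manual.item())
```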

Evaluate

network.eval()

with torch.no_grad():
    a_train = network(X_train)
    a_test = network(X_test)

print("Test set accuracy: ", accuracy_score(y_test, np.where(a_test[:, 0].numpy()>0.5, 1, 0)))

fig, ax = plt.subplots(1, 2, figsize=(12, 6))

ax[0].scatter(X_train[:, 0], X_train[:, 1], c=np.where(a_train[:, 0].numpy()>0.5, 1, 0), cmap='Set2')

ax[0].set_title('Train data')

ax[1].scatter(X_test[:, 0], X_test[:, 1], c=np.where(a_test[:, 0].numpy()>0.5, 1, 0), cmap='Set2')

ax[1].set_title('Test data');

Test set accuracy: 0.99
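The evaluation above turns predicted probabilities into hard labels by thresholding at 0.5 and then scores them against the true labels. A minimal NumPy sketch of those two steps follows. The helper names `predict_labels` and `accuracy` and the sample values are made up for illustration and are not part of the notebook.

```python
import numpy as np


def predict_labels(probs, threshold=0.5):
    """Turn predicted probabilities into hard 0/1 labels."""
    return np.where(np.asarray(probs) > threshold, 1, 0)


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


probs = np.array([0.9, 0.2, 0.6, 0.4])  # made-up probabilities, for illustration only
y_true = np.array([1, 0, 0, 0])

y_pred = predict_labels(probs)   # array([1, 0, 1, 0])
acc = accuracy(y_true, y_pred)   # 3 of 4 correct -> 0.75
print(y_pred, acc)
```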

scooby.Report(core='torch')